Flying through a narrow gap using neural network: an end-to-end planning and control approach

Abstract

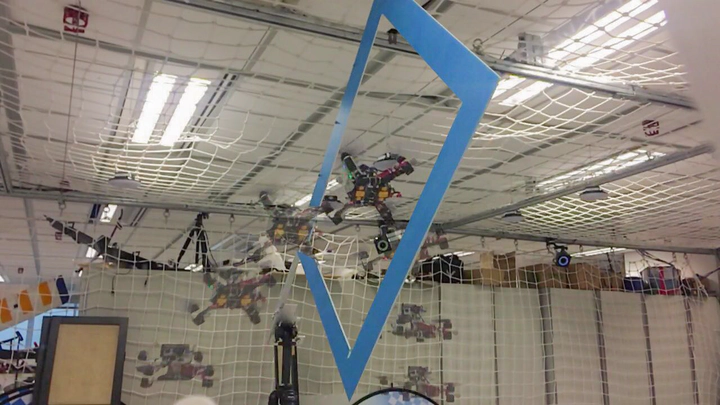

In this paper, we investigate the problem of enabling a drone to fly through a tilted narrow gap without a traditional planning and control pipeline. To this end, we propose an end-to-end policy network that imitates the traditional pipeline and is fine-tuned using reinforcement learning. Unlike previous works, which plan dynamically feasible trajectories using motion primitives and track the generated trajectory with a geometric controller, our method takes the flight scenario as input and directly outputs thrust-attitude control commands for the quadrotor. The key contributions of our paper are: 1) presenting an imitate-reinforce training framework; 2) flying through a narrow gap using an end-to-end policy network, showing that a learning-based method can address this highly dynamic control problem as the traditional pipeline does (see the attached video: https://www.youtube.com/watch?v=-HXARYlhat4); 3) proposing a robust imitation of an optimal trajectory generator using multilayer perceptrons; 4) showing how reinforcement learning can improve the performance of imitation learning, with the potential to outperform the model-based method.


Crossgap_IL_RL is the open-source project of our IROS 2019 paper “Flying through a narrow gap using neural network: an end-to-end planning and control approach” (our preprint version on arXiv, our video on YouTube). It includes some of the training code, pretrained networks, and a simulator (based on AirSim).

Introduction: Our project can be divided into two phases: imitation learning and reinforcement learning. In the first phase, we train our end-to-end policy network by imitating a traditional pipeline. In the second phase, we fine-tune the policy network using reinforcement learning to improve its performance. The framework of our system is shown as follows.
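To make the two phases concrete, here is a deliberately tiny toy sketch. It is not the code in this repository: the 1-D dynamics, the `expert_command` function, the single-gain policy, and the random-search fine-tuning step are all hypothetical stand-ins (the real project uses a policy network, a full planning and control pipeline as the expert, and a reinforcement learning algorithm not described in this section). It only illustrates how a policy can first be fitted to an expert's commands and then improved further against a reward.

```python
import random

random.seed(0)
DT, STEPS = 0.1, 30  # toy integration step and rollout length (assumed values)


def expert_command(x):
    """Hypothetical stand-in for the traditional pipeline: a cautious P-controller."""
    return -1.0 * x


def rollout_return(gain, x0=1.0):
    """Return of the policy u = -gain * x on the toy dynamics x <- x + DT * u."""
    x, total = x0, 0.0
    for _ in range(STEPS):
        x += DT * (-gain * x)
        total -= x * x  # reward penalises the remaining error at each step
    return total


# Phase 1: imitation learning.
# Label sampled states with the expert's commands, then fit the policy gain
# by least squares so that -gain * x matches the expert labels.
states = [random.uniform(-1.0, 1.0) for _ in range(200)]
labels = [expert_command(x) for x in states]
gain = -sum(x * u for x, u in zip(states, labels)) / sum(x * x for x in states)
imitated_gain = gain
return_after_imitation = rollout_return(gain)

# Phase 2: fine-tuning against the reward.
# Random-search policy improvement is used here only as a simple stand-in
# for reinforcement learning: perturb the gain, keep it if the return improves.
for _ in range(200):
    candidate = gain + random.gauss(0.0, 0.5)
    if rollout_return(candidate) > rollout_return(gain):
        gain = candidate
return_after_finetuning = rollout_return(gain)

print("gain after imitation:  ", imitated_gain)
print("gain after fine-tuning:", gain)
print("return after imitation:  ", return_after_imitation)
print("return after fine-tuning:", return_after_finetuning)
```

In this toy setting the imitated policy can only be as good as the cautious expert it copies, while the reward-driven second phase is free to move past it, which mirrors the motivation for fine-tuning after imitation.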

Fine-tuning the network using reinforcement learning:

Our real-world experiments: