R$^3$LIVE: A Robust, Real-time, RGB-colored, LiDAR-Inertial-Visual tightly-coupled state Estimation and mapping package

Abstract

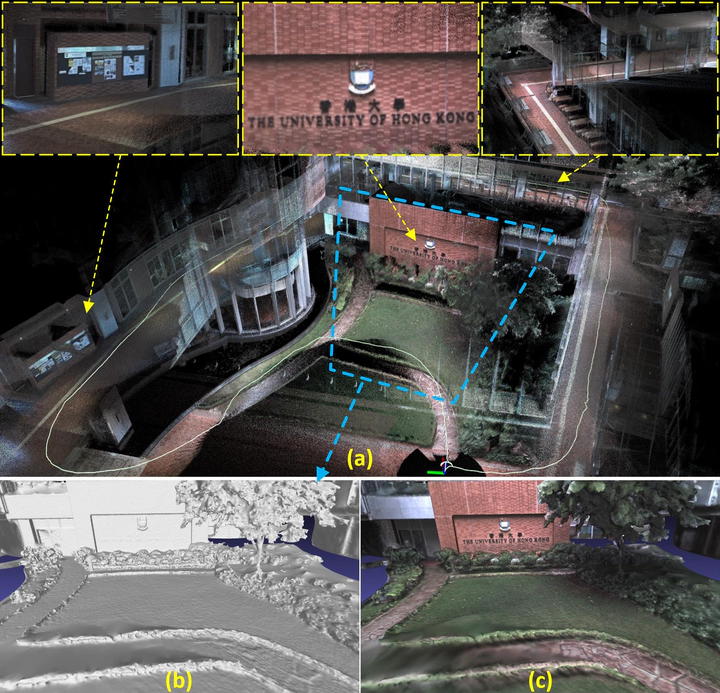

In this paper, we propose a novel LiDAR-Inertial-Visual sensor fusion framework termed R$^3$LIVE, which takes advantage of the measurements from LiDAR, inertial, and visual sensors to achieve robust and accurate state estimation. R$^3$LIVE consists of two subsystems: a LiDAR-Inertial odometry (LIO) and a Visual-Inertial odometry (VIO). The LIO subsystem (FAST-LIO) uses the measurements from the LiDAR and inertial sensors to build the geometric structure of the map (i.e., the positions of 3D points). The VIO subsystem uses the data from the visual and inertial sensors to render the map’s texture (i.e., the colors of 3D points). More specifically, the VIO subsystem fuses the visual data directly and effectively by minimizing the frame-to-map photometric error. R$^3$LIVE is developed based on our previous work R$^2$LIVE, with a completely different VIO architecture design. The overall system is able to reconstruct precise, dense, 3D, RGB-colored maps of the surrounding environment in real time (see our attached video https://youtu.be/j5fT8NE5fdg). Our experiments show that the resulting system achieves higher robustness and accuracy in state estimation than its current counterparts. To share our findings and contribute to the community, we open-source R$^3$LIVE on our GitHub: https://github.com/hku-mars/r3live.

1. Introduction

R3LIVE is a novel LiDAR-Inertial-Visual sensor fusion framework, which takes advantage of the measurements from LiDAR, inertial, and visual sensors to achieve robust and accurate state estimation. R3LIVE is built upon our previous work R2LIVE and consists of two subsystems: the LiDAR-inertial odometry (LIO) and the visual-inertial odometry (VIO). The LIO subsystem (FAST-LIO) uses the measurements from the LiDAR and inertial sensors to build the geometric structure of the global map (i.e., the positions of 3D points). The VIO subsystem uses the data from the visual and inertial sensors to render the map’s texture (i.e., the colors of 3D points).
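To make the split between geometry (LIO) and texture (VIO) more concrete, the following is a minimal Python sketch of a frame-to-map photometric error of the kind mentioned in the abstract: colored map points are projected into a camera frame and compared with the pixels they land on. It is an illustration only, not the R3LIVE implementation. All names (`MapPoint`, `Pinhole`, `project`, `photometric_error`) are hypothetical, the camera is assumed to be an ideal pinhole with identity rotation, and pixels are looked up by nearest-neighbor rounding.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class MapPoint:
    """Hypothetical colored map point (not an R3LIVE type)."""
    position: Vec3  # 3D position in the world frame (geometry, from LIO)
    color: Vec3     # RGB color stored in the map (texture, from VIO)


@dataclass
class Pinhole:
    """Ideal pinhole intrinsics; lens distortion is ignored (assumption)."""
    fx: float
    fy: float
    cx: float
    cy: float


def project(point_cam: Vec3, cam: Pinhole) -> Optional[Tuple[int, int]]:
    """Project a camera-frame point to the nearest pixel, or None if behind the camera."""
    x, y, z = point_cam
    if z <= 0.0:
        return None
    u = cam.fx * x / z + cam.cx
    v = cam.fy * y / z + cam.cy
    return int(round(u)), int(round(v))


def photometric_error(
    points: List[MapPoint],
    image: List[List[Vec3]],  # image[row][col] holds an RGB tuple
    cam: Pinhole,
    cam_position: Vec3,       # camera position in the world frame
) -> Tuple[float, int]:
    """Return (sum of squared color differences, number of points used).

    Simplification: the camera rotation is assumed to be the identity, so the
    world-to-camera transform is a pure translation.
    """
    height, width = len(image), len(image[0])
    total, used = 0.0, 0
    for p in points:
        # World frame -> camera frame (translation only, by assumption).
        p_cam = tuple(a - t for a, t in zip(p.position, cam_position))
        pixel = project(p_cam, cam)
        if pixel is None:
            continue  # point is behind the camera
        u, v = pixel
        if not (0 <= u < width and 0 <= v < height):
            continue  # point falls outside the image
        observed = image[v][u]
        total += sum((o - c) ** 2 for o, c in zip(observed, p.color))
        used += 1
    return total, used
```

A state estimator would search for the camera pose that makes this error small. Here the pose is reduced to a position purely to keep the sketch short.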

1.1 Our accompanying videos

Our accompanying videos are now available on YouTube (click the images below to open) and on Bilibili (videos 1 and 2).

1.2 Our associated dataset: R3LIVE-dataset

The dataset we use for evaluation, R3LIVE-dataset, is also available online. You can access and download it via this GitHub repository.

1.3 Our open-source hardware design

All mechanical modules of the handheld device we use for data collection are designed to be FDM printable, and the design schematics are also open-sourced in this GitHub repository.

2. R3LIVE Features

2.1 Strong robustness in various challenging scenarios

R3LIVE is robust enough to work well in a variety of LiDAR-degenerated scenarios (see the following figures):

It even works in environments that are simultaneously LiDAR-degenerated and visually texture-less (see Experiment-1 of our paper).

2.2 Real-time RGB map reconstruction

R3LIVE is able to reconstruct precise, dense, 3D, RGB-colored maps of the surrounding environment in real time (watch this video).

2.3 Ready for 3D applications

To make R3LIVE more extensible, we also provide a series of offline utilities for reconstructing and texturing meshes, which further narrow the gap between R3LIVE and various 3D applications (watch this video).

3. Acknowledgments

In the development of R3LIVE, we stand on the shoulders of the following repositories:

- R2LIVE: A robust, real-time tightly-coupled multi-sensor fusion package.

- FAST-LIO: A computationally efficient and robust LiDAR-inertial odometry package.

- ikd-Tree: A state-of-the-art dynamic KD-Tree for 3D kNN search.

- livox_camera_calib: A robust, high-accuracy extrinsic calibration tool between a high-resolution LiDAR (e.g. Livox) and a camera in targetless environments.

- LOAM-Livox: A robust LiDAR Odometry and Mapping (LOAM) package for Livox-LiDAR.

- openMVS: A library for computer-vision scientists and especially targeted to the Multi-View Stereo reconstruction community.

- VCGlib: An open-source, portable, header-only Visualization and Computer Graphics Library.

- CGAL: A C++ Computational Geometry Algorithms Library.